In the past few weeks, I was able to attend an Oracle Performance Optimization training put on by my organization. The training was performed by Gaja Vaidyanatha(@dbperfman). Now Gaja was a very cogent and informative trainer. The reason for this post though, is that i finally had the more formal training in the Oracle side to compare to the SQL Server side. In around 2010 or so, i went to a week long Performance Tuning and Optimization class put on by Microsoft. That was conducted by Josh Vickery (https://www.linkedin.com/in/joshvickery) also a blazingly smart guy.

What’s common between Oracle and SQL Server Performance Tuning?

Well hopefully without coming across as a hanger onner, what’s common is how obvious in hindsight the general concepts are. Theory of constraints and basic analysis and scientific method rule the day. Of course the tools vary, but the basic approach is the same. Gather data on where your database operations are spending most of their time, determine the largest blocker. Optimize that, then re-measure. Lather, rinse, repeat. So both formal instructions were not necessarily new frameworks of thinking for someone who’s had analytical tasks before. But like most training, there are tricks of the trade and good mindsets shared

Hints, connection settings, session settings

Both platforms have a framework of database session hints, connection settings, sessions settings. Most of which you should avoid. While the Einsteins (ref: Lhotka) may point out that you can get such and such a performance increase or less code, etc. They come at great cost. #1 the difficulty the next person is going to have figuring out what the heck you were trying to do. #2 entropy: the characteristics of the database will change and/or product upgrades will obviate the need for the custom configuration or actually make it so its harmful. Every time I hear about such thing, i think, what are the odds that I can outthink the engineers who designed either of these database platforms.

I will concede one point that was made. That if the hint or setting provides information that wasn’t previously available to the database platform. e.g. cardinality on a temporary table which can’t be known at design time.

It’s the application, stupid!

Other than obvious database perf issues, it’s usually going to be application design issues that drive performance. Either the application is querying too much data for what it needs, querying the same data repetivily, having long-running applications on the user thread. While databases will continue to evolve and can be made to perform better and better for cheaper and cheaper, the application design owns the user experience.

What’s different between Oracle and SQL Server Performance Tuning?

Some will read that question and say ‘everything’. I’m going to be contrarian and say at the high level, not much. The steps and tools are obviously different. But you still view an execution plan for a given query (either estimated or actual), or use a profiler tool to analyze waits

Tooling

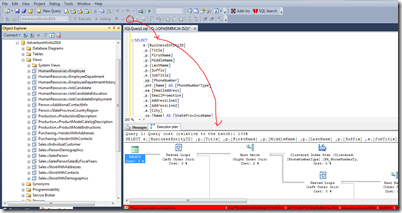

The tooling for Oracle is a lot less mature than SQL Server. Maybe not a lot less, but much less accessible. In SQL Server, through the Management Studio (SSMS), for a query, you can view the estimated execution plan (before actually running the query) or the actual execution plan (after running the query). In Oracle, the provided tools – SQL Developer does have a way to the view the estimated plan. To view the actual plan, one has to set a session variable to ‘trace’, browse to the file system of the server (!), copy down a binary file, pass it to an executable called TKProf, then view the output as a text file. I will say although the SQL Server Management Studio’s graphical view of the execution plan is easier to navigate, the outline view of the execution plan on the Oracle side does have its charms.

In fairness, you might consider Oracle’s tracing/TK prof workflow more similar to SQL Server Profiler and now Extended Events as it includes wait stats and things of this nature. So then you’re back to this being somewhat limited to sysadmins vs. developers.

But to the point of it being less accessible, graning permission to n number of developers to access the file system of a database server is odd or possibly untenable. Luckily for the class I was in, one of our very sharp Oracle DBAs came up with a workaround to expose the default OS trace file location of one of our test servers over HTTP using a simple web server. Otherwise, the 3 day class would have been spent looking at PowerPoint. It would seem that third-party tools such as TOAD would provide this capability, but was unable to confirm that with some regular TOAD users

| SQL Server | Oracle | |

| Estimated Execution Plan | Click the ‘Display Estimated Execution Plan’ button in the toolbar  | Click the Explain Plan button in the toolbar  https://markhoxey.wordpress.com/2012/07/10/obtaining-an-execution-plan/ |

| Actual Execution Plan | Click the ‘Include Actual Execution Plan’ button in the toolbar  | Execute the SQL commands to setup a trace file for yourself alter session set tracefile_identifier='MyTrace'; Turn on tracing alter session set sql_trace=true; Execute your SQL Turn-off tracing alter session set events '10046 trace name context off'; ?Find the file on your servers files system Copy to your hard drive Run the command TKPROF input.trc output.txt |

So while the steps and individual commands are different, the concepts of Performance Tuning and Optimization in both the SQL Server and Oracle platform are very similar. If you can find third party tools or open source tools to get around the rough edges of Oracle’s trace file access, developers should find their optimization skills translate well across products.